24/011

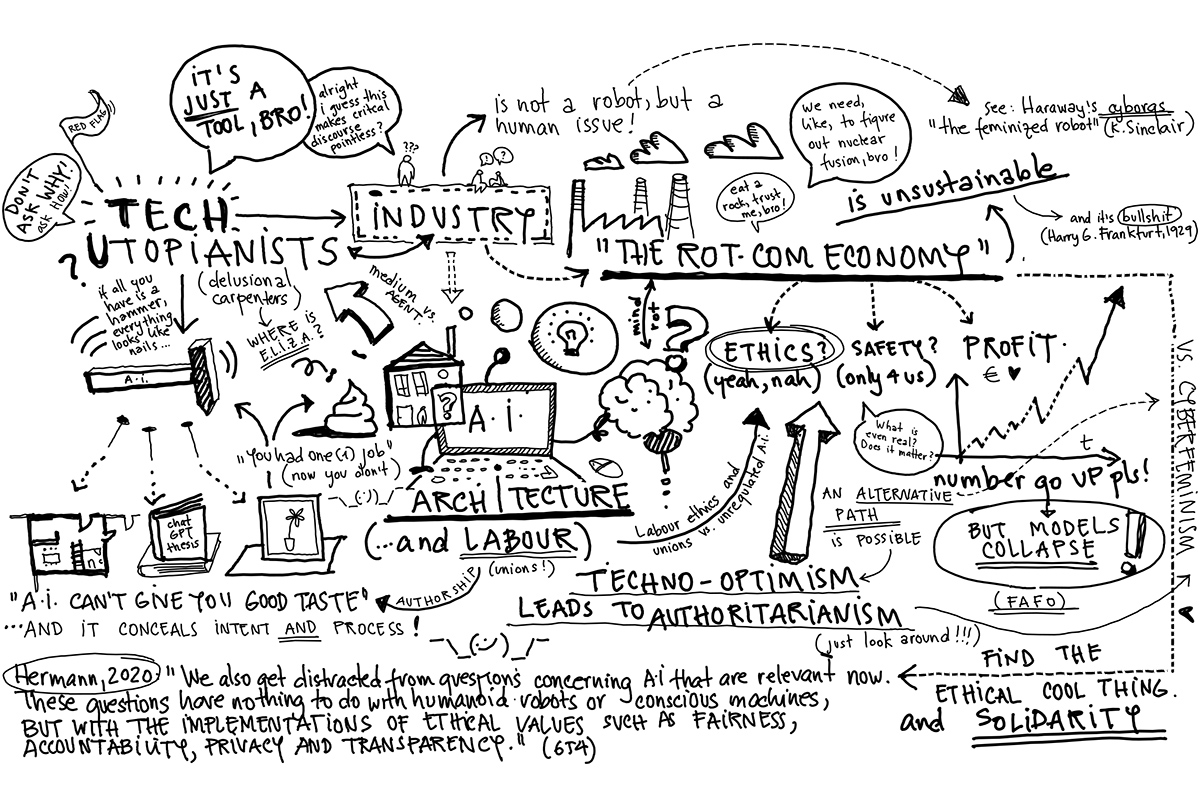

AI in Architecture: Awe and Autophagia

An Opinion Piece by Monica Tușinean

«The algorithm is not only prone to corruption – it was never innocent to begin with.»

A cold open

As I write this, a tiny robot inside my computer, a distant relative of Clippy, is monitoring my input and output. I have used AI aids to check and correct my writing, screen it for accidental plagiarism, and occasionally suggest ways to reformulate paragraph-long sentences. While I acknowledge its interventions, I never accept the corrections, as I am rather fond of rambling and malaphor.

All this is to say that what follows is a loose summary of contributions to the discourse and partial critique of some AI-related phenomena, but that I am not against the tool. I do, however, have significant reservations regarding the methods that are being employed by users and, more significantly, the industries inserting these products into every aspect of our personal and professional lives with little regard for safety or ethical business practices [Basal, 2024].

On Techno-utopianists

Some of the people one should trust least in our profession are techno-utopianists [Monbiot, 2022]. The term is not meant for colleagues, practitioners and scholars alike, who welcome and appreciate the benefits that AI-driven conceptual utensils bring (far from it), but for those who, lacking anything except their digital hammers, see nothing but nails.

These delusional digital carpenters, experts as they may be, tend to perpetuate dangerous practices and even more concerning narratives, not because they genuinely believe in the cause (although this is a sliding and blurry delineation of borders) but because their symbolic and financial capital depends on it. This goes for a lot of specialists, as well as for the cultural avant-gardes. Mysteriously, all critical positions stop exactly where their comfort zones begin and, equally bafflingly, at the intersection with their stakeholders’ or beneficiaries’ interests. I myself have to reflect on this continuously, as all of us, regardless of affiliation and area of expertise, would be well advised to do.

This essay aims to contextualise an architect's perspective, as a gap begs to be filled between the aforementioned techno-utopianists and the adepts of the so-called old school. The latter, unfortunately, have had little to contribute except variations on "it’ll take our jobs but will never truly replace us, so I’m not engaging with it", which sheds a poor light on the rest of us sceptics.

As this text will, by its very nature, be non-exhaustive, readers are asked to click on the cited sources before short-circuiting (or hallucinating) and to keep in mind that this might be little more than an obsolete amalgamation of online resources and lukewarm takes in six months' time. The input gathered spans several years, and it has taken a while to put anything to paper (my apologies to Kontextur’s editor-in-chief) because of the feeling that any expression of awe, interest, or concern might be rendered obsolete by the increasing acceleration of technological advancements and the insights that follow them.

What has nonetheless crystallised over time will be shared. The rest is archived and ripe for LLMs to pluck and make bullshit [Hicks et al., 2024] pies with.

Architecture and AI

Pertaining to architecture, four fields emerge where AI and its implications should be considered:

The first relates to tech industry practices and the conditions of production. One of the most extensive critiques of the AI industry is that it feeds into and enables a toxic narrative of perpetual growth, unchecked, unregulated, inequitable and destructive, and which, trickle-down lies be damned, only benefits the ones who do not, in fact, produce anything of value.

The rot-com economy seeps into architectural practice without our being aware of, for instance, what OpenAI CEO Sam Altman’s intentions are, or of the fact that the tech industry is a prime example of a profession on the path to autophagy.

The second aspect is epistemic, or, to put it less pretentiously, relates to information and knowledge creation. This is perhaps the area most prone to discord because creativity is a vague term, and its emergence is, for the most part, tacit, non-explicit and hard to fix into a set of codes and formal strategies. And granted, in a world that is quite literally burning, to a great degree because of our collective professional contributions to the climate emergency, one might argue that cultural concerns centred on architectural aesthetic solutions or even good taste are frivolous. Of course, one would be catastrophically wrong.

A third issue concerns labour: job security for architectural workers, future-proof education, copyright infringement, and how techno-capital might exacerbate already exploitative practices in the profession. The conversation around work equity, hierarchies, and forms of capital has only just started gaining public momentum in architecture, and AI adds a highly volatile compound to the mix.

Ultimately, and perhaps most troubling, sustainability poses the most pressing issue: considering the enormous energetic toll of tech, adding another guzzler to the mix and selling it as a friend and helper should perhaps raise a tentative alarm. Architects are only just starting to acknowledge not only their contribution to the climate emergency but also the magnitude of the interrelated factors – from extraction to production to life cycles to CO2 emissions, you name it – that continue to take a toll on the environment. For a profession so apparently (read: performatively) preoccupied with sustainability, some of us were awfully quick to add another box to our cognitive dissonance pile.

But first things first (I have turned off my robot editor, so you’re stuck here with me; apologies if the introduction has dragged on a bit): my main concern, and perhaps the aspect that sets off a more emotional response, is that many conversations around AI are being silenced immediately by people lazily and condescendingly chanting the "it is only a tool" mantra [Tan, 2023].

Of course, nobody in their right mind who is even slightly tech-literate will claim that AI is some magical evil entity which has gained consciousness [Hicks et al., 2024] and will inevitably lead humanity towards destruction. Humans are doing an excellent job at that, and anyone who has engaged with the machine will know that it is, in fact, little more than a pseudo-eloquent dummy (much like yours truly). In the words of my 9th-grade C++ teacher, Mr. Pitic: "Remember, children, the computer is an idiot. It will only do whatever you tell it to".

Entanglements in Techno-ethics

Keller Easterling’s observation hovers above all of this: "Once taken up, no concept tool remains innocent for long" [Frichot, 2019]. This is precisely where pitfalls lurk and why techno-utopianists are inherently unreliable. While the factual expertise of architects who claim to be most proficient in digital practices cannot be questioned (not by me, at least), their intentions should be open to intense scrutiny. If one builds a career around something, chances are one will die of confirmation bias on a self-manufactured hill [Klein, 2023].

If you ask the cynics, this is poor scientific practice at best, and cult-like behaviour [Evans, 2024] fuelled by sunk cost fallacies at worst. Either way, if expertise is not employed to critically interrogate the domain from which it emerged, it is absolutely useless. Technology is Janus-faced, and history has proven this in more instances than can be named here, but even if the coin flips and falls randomly (which it does not), the risk of it landing on the wrong side should be averted at all costs.

In an excellent piece for "Rolling Stone" magazine published earlier this year, Robert Evans elaborates on the tech cult’s propensity to employ faulty and indeed dangerous methods without the slightest consideration for users’ well-being, as long as they generate profit [Evans, 2024]. This trend is probably most noticeable in Google’s steady decline in quality: venture capital is no longer interested in creating good products, and its main priority is to, in Ed Zitron’s parlance, "Make number go up" [Zitron, 2024].

This will become an issue with the software architects use as well, and the people spearheading this industry are not immune to bias; their job, first and foremost, is to convince shareholders that their software will remain profitable.

One cannot (and should not) detach the humans leading these companies from their work. Adobe VP Alexandru Costin was urged during a panel discussion to consider the "direct harm algorithmic bias does to marginalised communities." Costin claimed that "the biggest risk with generative AI wasn’t fraud or plagiarism, but failing to use it" [Evans, 2024]. This answer should give one pause.

We also cannot (and should not) trust that AI tools will be designed to be used fairly or that they will help us create or implement better designs, workflows, you name it, when it has become evident across the tech industry that this is not where their creators’ priorities lie [Hutson, 2016].

The algorithm is not only prone to corruption – it was never innocent to begin with.

The subversion of an initial positive intent is perhaps unavoidable, as most architects are far from tech-literate. The speed with which we are required to produce work prohibits most of us from truly investing our resources into learning how to use the devices at our disposal, leaving us naively hoping that we will eventually, organically and intuitively, figure it out somehow.

The answer should, instead, be found in software and methodologies developed not only by and with architects with significant proficiency in the field but also by those with a track record of critical reflection on the issue and who acknowledge its limitations.

A recent Baunetz Campus interview with Sandra Baggermann and Cas Esbach promises such an approach while rightly tackling the (albeit fluctuating) boundaries of AI-aided design practices. Most importantly, however, they address the common fallacy that AI is inherently creative and conducive to a widening of imaginative horizons:

“AI tools in architecture undermine creativity without users realising it. These tools give a false sense of power. Designers spend a lot of time customising prompts and settings, only to end up with designs that resemble well-known mainstream architectural works. The real danger lies in the subtlety of this effect. The designers believe they are exercising their creativity, but they are unconsciously constrained by the limited and biased training data of the AI” [Radulescu, 2023].

Nonetheless, some reservations remain that, as is par for the course in tech, positive developments are perpetually at risk of being tainted by the greasy hand of capitalism [Marantz, 2024]. Technical prowess aside, one would also love to see users, as well as devs, take the occasional ethics of technology course, particularly in cases where software companies grant architecture schools tens of millions of euros to fund AI research institutes named after them [TU München, 2020].

Speaking of names, perhaps calling it AI was the first mistake (or a very clever, if devious, marketing tactic), as it is a misnomer that fosters the idea that what has been created is more creature than tool and, as Tyler Austin Harper writes for "The Atlantic", invites us to put more trust in it than we ought to, making us not only dependent on it but effectively disabled by the algorithm and devoid of autonomy [Harper, 2024]. I do not entirely agree with this argument, as I don’t believe that, for instance, losing the ability to orient myself in nature (or cities) without GPS maps amounts to tragic cognitive impoverishment. Many technologies are helpful – that is not up for debate here.

Suppose, however, one follows Harper’s argument to its logical conclusion. In that case, the real issue rears its ugly head again: the unquestioning belief that we as designers "need" this miraculous tool might take root in our collective consciousness, render us incapable of questioning its power and its makers’ authority, and prevent us from demanding that it become better, useful to us, and made into what WE need it to be.

More Creativity Pitfalls

Self-described seasoned designers will, of course, claim that we are all still well able to judge if whatever the machines serve us is useful or of quality, despite the fact that a look around our cities should be proof enough that a significant number of architects are, indeed, barely able to identify whether their own authentic and original creations are any good. Even accepting that, as of now, well-educated and experienced architects could be fit enough to filter through the sludge spewed indiscriminately by machines we do not comprehend, one should consider that the baseline by which we evaluate design products might shift dramatically in the future.

Model collapse poses a real danger to the quality of AI-generated output, particularly affecting LLMs and text-to-image GAI. In layperson’s terms: if the software can only create by assembling data from preexisting collections, and given how much useless output is being churned out and fed back into the data pool, the unusable will eventually vastly outnumber the beneficial, and generated results, fed with garbage, will inevitably spit out more of the same [Evans, 2023]. The AI thereby loses the capacity to, for instance, "understand how human language functions, and is no longer able to form coherent sentences". As "Wired" journalist Vauhini Vara muses, "One wonders whether, at that point, humans will still have the ability themselves" [Vara, 2023].

Extrapolating from this, we can assume that if we pour content mixed and remixed by an algorithm (which we cannot manipulate ourselves), into our internal reference collections, we will eventually no longer be able to reflect on whether AI output, or anything for that matter, holds any real value.

This sketches out the "shifting baseline syndrome" [Alleway, 2022] I hinted at a few paragraphs ago: designers will eventually have forgotten what good/sustainable/beautiful/(insert your criteria of choice here) architectures are, and blindly accept lesser products because we have lost the capacity to discern which is which. This may be observed in current aesthetic trends, fed by uncommented and unquestioned images made palatable for quick consumption through social media. As an aside, this phenomenon has been excellently analysed by Alex Murrell in "The Age of Average" [Murrell, 2023].

Come to think of it, the concern around databases filled with composted iterations of half-baked ideas has little to do with AI and everything to do with how we engage with them. Nonetheless, as technology promises to make everything faster and more efficient, nothing guarantees that this will only apply to the good.

Once more, this is the crux of the rot economy [Zitron, 2024]. If everything has to grow continuously, no matter the results or the effects on the environment and others, so-called progress will eventually become cancerous. It is not the technology that corrupts the user or product (because, lest we forget, it is not sentient) but venture capitalism.

Taste

"Taste" is not a term architects like to use in public discourse. Still, eventually, many of the aforementioned failures to determine and select for quality are tied not only to architectural education but also to matters of, you guessed it, good taste.

While I have occasionally argued that aesthetics may not take centre stage in architectural discourse, seeing as we have much more pressing issues, from the housing crisis to societal and climate collapse, it would be short-sighted and frankly disingenuous to remove this aspect from the conversation entirely, as it is, or so one would hope, one of the central drivers of design thinking. And before anyone emails me to rant or educate me on the issue, let the record show that I am using the word "taste" as US Supreme Court Justice Potter Stewart defined it: "I know it when I see it."

"AI can’t give you good taste", Elizabeth Goodspeed claims, and I wholeheartedly agree. "Most AI images look like shit", she laments. "AI 'artists' are quick to lecture me that generative tools are improving every day and that what they spit out won’t always look this way – I think that’s beside the point. What makes AI imagery so lousy isn’t the technology itself but the cliché and superficial creative ambitions of those who use it. (...) AI image generation is essentially a truncated exercise in taste" [Goodspeed, 2024].

Essentially, we talk about taste as an issue of personal sensibility emerging from curated input, which, as Goodspeed argues, rarely occurs by accident and is instead the result of work beyond "cultured education or innate coolness". This archival labour, by which we select, sediment, access, and eventually create, is short-circuited by AI, regardless of whether a database is carefully designed and supplemented with bespoke imagery or other information that fits its user’s particular creative language.

Some AI creators who work in and with architectural design will mention that the way to go is to control the input. In the case of architecture offices, this will often happen with designs already produced within the practices in question.

But as most creatives will attest, an essential tactic employed to revitalise creative thought is actually looking beyond what one knows toward vistas outside of one’s specialised profession, cultural echo chambers, geographic location, et cetera.

Therein lies a paradox: AI can generate acceptable results when it draws from what the user already knows or considers worthy, but in the world outside of that... hic sunt dracones. Isn’t it indeed a shame that the creative openness promised by AI might ghettoise creativity, sequestering us into virtual gated communities and confining us to echo chambers?

The Ick

All modes of using AI, be it generating images from prompts I would have otherwise given myself, analysing other people’s work, or hybridising my own design methods, have given me an uneasy feeling because creativity and intellectual pursuits seem filtered through something that is not only beyond my understanding but that, as mentioned above, definitely has absolutely no idea what it is doing.

Usually, this loss of control is enjoyable in creative work; it generates states of flow and unexpected manifestations of what would otherwise be confined to the realms of tacit knowledge, but AI made things explicit too quickly for comfort.

This, of course, is very intimately tied to my own bias regarding design methods and how I prefer to allow ideas to float into being by talking, doodling, drawing by hand, and making loose sketches, essentially by enabling the emergence of what Otto Paans dubbed "diaphanous" graphic output [Paans, 2024].

These ghosts seeping through the veil that separates my internal "database" from the external world are pleasant, and their inexactitude is enriching and inviting. However, when they float out of the machine, they become uncanny and feel soiled and untrustworthy, because the process by which they were created is not only opaque, or unknowable, it is concealed.

I want AI to do my taxes and laundry [Babb, 2024] and to help me write boring emails that would sound like a robot wrote them even if I had composed them myself. I don’t want to invite it quite so uncurbed into my creative process: even the happier accidents felt hollow, devoid of texture, and, all my rants about abolishing architectural authorship aside, they did not feel like they belonged to me, even after I had transformed and adapted them according to my sensibilities and my expertise. Admittedly, this says something about the relation between myself and my work and betrays that I may not have yet effectively interrogated what has been drilled into me over the last twenty years: that in architecture, mastery and authorship reign supreme and go hand in hand.

Insidious Errors

Because I am nothing if not financially irresponsible and committed to the bit, I bought an OpenAI subscription and fed the final draft of my doctoral dissertation into the machine. Astonishingly, it can explain my work better than I can when put on the spot (I have often resorted to AirDropping people my abstract when asked about it at parties). I translated it and extracted segments to share. I asked it for faults and inconsistencies, and I had bona fide conversations with it about the research. The realisation was, for one, that I probably got plausibly intelligent answers because I intimately knew the source material and knew what to ask, and that despite all of this, it eventually, inevitably, started hallucinating (I hate this word and would much prefer we replaced it with "talking shite"). What was genuinely disconcerting was that someone unfamiliar with the topic might not have caught on to the latter.

The subtlety of its errors is more alarming than AI telling users that eating a small rock a day would help their mineral intake or that a little bit of edible glue would make pizza cheese more cheesy [Williams, 2024]. We all know that a healthy grain of salt should accompany any interaction with these tools. Still, the fact that the robots bullshit [Hicks et al., 2024] with such ease and eloquence and generate source literature that doesn’t exist makes me long for entire salt mines.

One might argue that clever AI-driven plagiarism checkers can thwart these types of transgressions, but as a recent case described by "The Guardian" [Topinka, 2024] shows, these tools have occasionally raised false alarms in universities when students’ writing was deemed suspicious due to the use of rare or bizarre terms or accidentally ill-sourced bibliographies.

While architects might be less concerned with plagiarism or with de facto wrong information being sold to us as gospel, the inherent fallibility of the output and our ineptitude at identifying errors might one day have grim repercussions, when mistakes in AI-generated tender documents lead to grave malpractice or accidents on construction sites. And perhaps it is worth repeating here that LLMs do not, in fact, understand what they are saying [Hanemaayer, 2022]. This intrinsic fault, coupled with most architects' inherent hubris, could, if left unchecked, indeed have serious consequences "in real life".

Apropos…

Labour

Optimists and cynics alike should agree on one thing: AI is, above all, a political issue. And, as we are observing, the technology is not democratic at its core, despite some developers’ and dreamers’ best intentions.

When listening to an architectural AI expert (whom I will not name, lest I invite defamation accusations) during a university lecture, a striking divide in attitudes became apparent. After a lengthy explanation of "how" the algorithms work, the expert mentioned that, in his view, most critics who claim that the tech could never surpass human aptitudes would be proven wrong. And he is without doubt right about this.

The problem, however, is that this is not the primary issue most AI agnostics are addressing today: what drives conversations is not the "how" but the "why". Some may argue that since AI is here to stay and will inevitably penetrate all aspects of our lives, focusing on its development is paramount, and the time to wonder why has long passed.

This opens up a false dichotomy in which supporters and sceptics occupy different and opposing positions in discourse, become polarised (and radicalised), and eventually fail to address structural shortcomings that impact everyone all the same.

When it comes to the dangers AI poses to many creative workers, the can of worms lies open and waiting for us to dig our hands into it. As architects, we can take some solace in the fact that our skills and mindsets ideally lend themselves to tackling wicked problems and that a degree of adaptability has always been necessary and, therefore, nurtured in the profession. This doesn’t mean, however, that everyone will be able to adapt.

When looking at the areas where AI is taking over at a staggering rate and where software such as Property Max and its cousins is being adopted by real estate developers and the architectural workers they employ, one might consider that AI will perhaps absolve designers of menial tasks, such as painstakingly "optimising" floor plans according to criteria dictated more by norms and profit generation than by common sense or spatial quality. The aforementioned AI expert matter-of-factly mentioned that such architects would eventually have to look for other jobs, while the ones who contribute "culturally significant" work would be safe from being replaced by robots.

This might be true, but we should not accept it without examining what it will mean for the profession. Specifically, it might gravely exacerbate the division of architects into two classes: the worthy creatives and the CAD monkeys [Walsh, 2024], a classist categorisation that already exists, by the way.

History has also shown that technological innovations lauded as tools for the liberation of non-dominant groups have rarely delivered on that promise. In "More Work for Mother: The Ironies of Household Technology", Ruth Schwartz Cowan illustrates how, while husbands were happily released from their household duties, technological advancements did not, in fact, grant women the same privileges. Household gadgets replaced men’s work, but middle-class women were now burdened with more, albeit novel, tasks that further bound them to the home. It is not a far reach to imagine how AI might create a similar situation in an already hierarchical and often exploitative profession.

Architectural designers, or at least the ones who did not have the opportunity to engage with AI in a meaningful way during their studies, might need to steer toward "prompt design", which, I will admit, is incredibly fun, but as I mentioned earlier, after an initial high, leaves one slightly dissatisfied, a feeling I would consider akin to a dopamine crash. I don’t exactly mind this practice, but I see a danger in it nonetheless – apart from imminent model collapse, the generated objects often lie to the viewer. This is concerning from an architectural education point of view, where students might be seduced by quick, shiny results and take them at face value, failing to recognise (or search for) what these designs lack. And thus the baseline shifts.

How long, then, before companies prefer to hire lower-paid workers instead of experienced designers whose tasks would encompass cleaning machine-generated imagery and making it "plausible"?

This is something that other professions already experience: graphic designers, illustrators, animators, and artists are all exposed to the very real effects of being de facto replaced by AI that often plagiarises their work. And it has become clear that devs do not care about these people in the slightest. In a piece for "The New Yorker", Kyle Chayka explains how AI is stealing from artists [Chayka, 2023] and how class action lawsuits are tilting at windmills trying to contain this predatory practice.

Additionally, numerous young architects stay financially afloat by producing high-quality visualisations for other offices. While tools such as ReRender, on the one hand, level the playing field for other small bureaus who cannot afford professional renderings and do not have the time to acquire the skills (and the software) to produce those images themselves, it could be argued that they also remove a significant source of income from architects who rely on this side hustle. Putting a damper on the excessive production of this kind of architectural representation (pseudo-realistic, glossy, deceptive) would probably be a welcome endeavour, but I don’t trust that outsourcing it to AI will achieve this goal.

Either way, AI companies charge users and make good money while sourcing other creators’ work without crediting authors, much less paying them. This is not referencing, sampling, or remixing – it is theft, plagiarism [Chayka, 2023] and a labour rights issue all rolled up into one.

As a profession that still normalises unpaid work to appease clients and bosses, we are not impervious to how AI skews (and manipulates) our perception of the value of our labour. In an article for "New Scientist", Chris Stokel-Walker reports on a research project that explored how the use of LLM chatbots influenced writers’ self-evaluation: twenty-eight per cent of them would accept a pay cut if they received help from AI. The researchers who conducted the study argue that this might indicate how much workers valued the support of AI rather than "their perception of how much less their finished work ought to cost due to diminished quality", but the implications are still concerning. Stokel-Walker suggests that "The basic unsubtle message here is that LLMs can make creative writing less specialised and thereby less valuable overall, and that will drive down demand power for any writer, regardless of whether they use an LLM" [Stokel-Walker, 2024]. Replace writing with any other creative practice.

There we go.

There is some hope, however, that if we succeed in addressing some of the threats AI poses to the profession, we might make strides in other directions as well. Niall Patrick Walsh argues in "Archinect": "Creativity won’t protect architects from automation – but labour unions might" [Walsh, 2024], and urges us to take note of the WGA and SAG-AFTRA strikes, which in 2023 won significant protections for workers against being exploited or made redundant by AI. Walsh acknowledges how architects might profit from software tools that streamline processes, enable more transparent communication with peers, clients and contractors, and free up time for designers to think, create and "reclaim that time that we’ve been giving away for so long". However, for these positive effects to occur, architects need autonomy and sufficient power to avoid an "explosion in expected output from the profession without an increase in fees or perceived value".

All of this will require us to indeed think about "how" we want to work alongside our robot helpers, but it will not absolve us from profoundly interrogating "why" we do any of it in the first place [Hanemaayer, 2022].

Sustainability

The last point I want to touch upon in this essay, albeit very briefly, is that AI processes consume gargantuan amounts of energy [Naughton, 2023]. This issue does not affect architecture exclusively but instead looms darkly over the entire artificial intelligence enterprise.

Elizabeth Kolbert asks: "How can the world reach net zero if it keeps inventing new ways to consume energy?" [Kolbert, 2024]. This is a problem that Altman has acknowledged himself, albeit with a little more levity (or carelessness): "We need fusion or we need, like, radically cheaper solar plus storage, or something, at a massive scale," he says, which would be fine and dandy if the Bulletin of the Atomic Scientists hadn’t already estimated that we are 90 seconds to midnight [Mecklin, 2024], and if climate scientists hadn’t been talking their mouths dry for years, warning that we will not be able to stay within the 1.5-degree threshold.

As Kolbert puts it, these "obscene amounts of energy" bring us full circle to why techno-utopianists should not be trusted unconditionally in the conversation on how AI will affect architecture. Inevitably, they will claim that AI is imperative and necessary and that it will eventually offer a solution to the ever-evolving climate emergency. "There’s a fundamental mismatch between this technology and environmental sustainability," states Alex de Vries, who in 2016 put together the Bitcoin Energy Consumption Index, which showcases numbers that are astounding, if not staggering, when compared with, for instance, the cumulative emissions of combustion engines. Nevertheless, the paradox is evident and should not be left unaddressed and unpoliticised.

In lieu of wrapping this up…

All this being said, there is no satisfying conclusion to any of it, nor are there any solutions that will cover all issues, but one way to approach it all would be to stay critical and informed. We can collectively aim to subvert, manipulate, and appropriate what appears inescapable and deterministic [Nye, 2006] by talking to each other, to experts, and to people whose opinions don’t overlap entirely with our own, and by following Donna Haraway’s advice to "stay with the trouble".

After all, it is all we have.

Literature:

Abdul, G. (2024, May 30). Risk of extinction by AI should be global priority, say experts. The Guardian.

Babb, T. (2024, May 16). Please, AI, Don’t Take Our Jobs, Take Our Tasks. The New Yorker.

Basal, T. (2024, June 15). The Apple-OpenAI Deal Puts AI Safety Under Even Greater Scrutiny. Forbes.

Evans, R. (2024, January 27). The Cult of AI. Rolling Stone.

Goodspeed, E. (2024, February 28). AI Can’t Give You Good Taste. It’s Nice That.

Hanemaayer, A. (Ed.). (2022). Artificial intelligence and its discontents: critiques from the social sciences and humanities. Palgrave Macmillan.

Hicks, M. T., Humphries, J., & Slater, J. (2024). ChatGPT is bullshit. Ethics and Information Technology, 26(2).

Hutson, M. (2016, May 16). Can We Stop Runaway AI? The New Yorker.

Klein, N. (2023, August 5). AI Machines Aren’t Hallucinating – But Their Makers Are. The Guardian.

Kolbert, E. (2024, September 3). The Obscene Energy Demands of AI. The New Yorker.

Chayka, K. (2023, October 2). Is AI Art Stealing From Artists? The New Yorker.

Lanier, J. (2024, January 3). How To Picture AI.

Marantz, A. (2024, November 3). Among the AI Doomsayers. The New Yorker.

Mecklin, J. (2024, January 23). A moment of historic danger: It is still 90 seconds to midnight. Bulletin of the Atomic Scientists. https://thebulletin.org/doomsday-clock/current-time/

Monbiot, G. (2022, May 13). We need optimism – but Disneyfied climate predictions are just dangerous. The Guardian. www.theguardian.com/commentisfree/2022/may/13/optimism-climate-predictions-techno-polluters

Murrell, A. (2023, March 20). The Age of Average. https://www.alexmurrell.co.uk/articles/the-age-of-average

Naughton, J. (2023, December 23). Why AI is a disaster for the climate. The Guardian.

Paans, O. (2024). Nebula rasa: The diaphanous as generative stimulus in architectural design. Arts & Communication, 2(1), 1922. https://doi.org/10.36922/ac.1922

Radulescu, S. (2023, June 13). Artificial Anthropocene. Baunetz Campus. www.baunetz-campus.de/focus/artificial-anthropocene-8602743

Reisner, A. (2024, February 29). Generative AI Is Challenging a 234-Year-Old Law.

Evans, R. (2023, June 20). AI is Coming for Your Children. Shatter Zone. https://shatterzone.substack.com/p/ai-is-coming-for-your-children

Ruiz, S. (2023, October 17). Technocapital Is Eating My Brains. Regress Studies.

Stokel-Walker, C. (2024, July 6). Writers Accept Lower Pay When They Use AI to Help With Their Work. New Scientist. www.newscientist.com/article/2434307-writers-accept-lower-pay-when-they-use-ai-to-help-with-their-work/

Tan, B. (2023, January 31). AI is Not ‘Just a Tool’. Medium.

The shifting baseline syndrome as a connective concept for more informed and just responses to global environmental change. (n.d.). People and Nature.

Topinka, R. (2024, February 13). The software says my student cheated using AI. They say they’re innocent. Who do I believe? The Guardian. www.theguardian.com/commentisfree/2024/feb/13/software-student-cheated-combat-ai

TU München. (2020, November 11). Neues Forschungsinstitut für Künstliche Intelligenz im Bauwesen. www.tum.de/aktuelles/alle-meldungen/pressemitteilungen/details/neues-forschungsinstitut-fuer-kuenstliche-intelligenz-im-bauwesen

Harper, T. A. (2024, May 21). The Big AI Risk Not Enough People Are Seeing. The Atlantic.

Vara, V. (2023, September 21). Confessions of a Viral AI Writer. The Atlantic.

Walsh, N. P. (2023, January 5). You, Me, and DALL-E: On the Relationship Between Architecture, Data, and Artificial Intelligence. Archinect.

Walsh, N. P. (2024, February 27). Creativity Won’t Protect Architects From Automation – But Labor Unions Might. Archinect.

Williams, T. (2024, May 27). Google goes viral after AI says to put glue on pizza, eat rocks.

Wong, M. (2024, February 16). OpenAI’s Sora Is a Total Mystery.

Zitron, E. (2024a). Better Offline. www.betteroffline.com

Zitron, E. (2024b, February 27). Software Has Eaten The Media. Where’s Your Ed.At. www.wheresyoured.at/the-anti-economy

Zitron, E. (2024c, March 6). The Rot-Com Bubble. Where’s Your Ed.At. www.wheresyoured.at/rotcombubble